Most acquisition strategies assume that a click is a click and an install is an install. Performance teams judge traffic by the same set of early metrics whether the source is an in-app banner or a search ad. Those assumptions hold for typical in-app placements, where users are already engaged and browsing. They fall apart when user acquisition (UA) managers start buying traffic from on-device surfaces, because user intent there is fundamentally different.

Understanding how intent changes across surfaces is not a minor detail. It should change the way UA teams evaluate early metrics, design creatives, and think about the user journey.

The Beginning: Similar Actions, Different Moments of Intent

Consider two users in different moments:

– First, there is a person playing a mobile game. They see a native banner inside the experience, tap it, and install another app. That action is influenced by distraction, engagement, and immediate curiosity. The user was already in a content consumption state and open to clicking around.

– Second, there is someone setting up a brand new device. Before they even interact with menus or content, they are presented with app suggestions on system screens or in OEM app stories. That user is not browsing for entertainment. They are consciously making decisions about what the new device should be capable of.

Both users may ultimately install an app. But the reasons behind the install are very different. In the first case, the install is guided by ongoing engagement and low attention cost. In the second case, the install is driven by an evaluative moment in which the user is making a choice about future utility and long-term use.

When intent is different, so is downstream behavior. The user who clicked inside a game may churn quickly if the product does not match their expectations. The user who installed from a system surface may open the app later, explore it more deliberately, and become more valuable over time.

The Middle: Why Intent Drives Metrics That Look “Weak” But Aren’t

Most click-through benchmarks, conversion-rate targets, and early revenue models were developed for in-app and social placements. These models assume that users who click are already “in the mood” to engage, and that installation will be followed by an immediate or rapid spike in engagement or monetization.

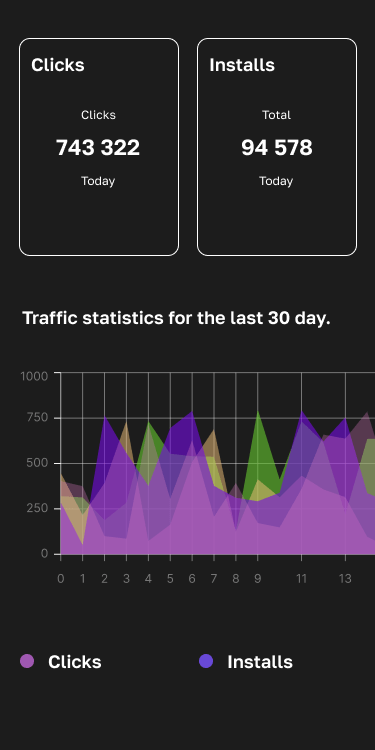

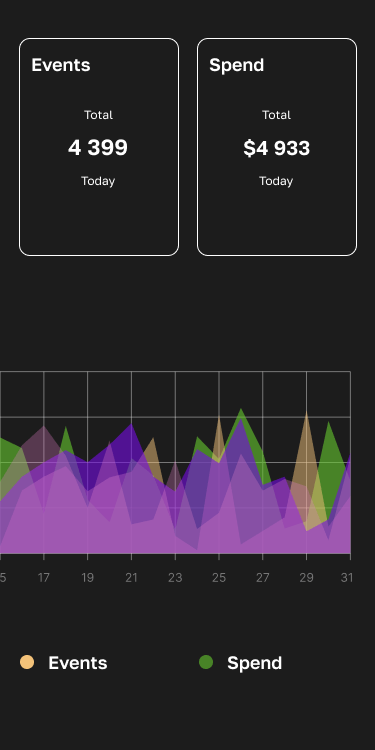

On-device traffic challenges these assumptions because users behave differently. They often install first and engage later. Monetization signals are slower to appear. Retention is steadier and does not always show early spikes.

This often leads to confusion among UA teams. Traffic can look slow based on day one or day three signals. Retention curves flatten instead of peaking. Early ARPU metrics seem lower than expected. Without context, it is easy to conclude that this traffic is weak.

But what appears weak in early windows might actually be a sign of deeper, more intentional engagement. Users acquired from on-device surfaces tend to take more time before forming a habit, yet their long-term value can be more consistent and reliable.

The root of the issue is not the quality of the traffic. It is that the traffic is being evaluated with the wrong model.
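
To make the evaluation-window problem concrete, here is a minimal sketch in Python. The daily revenue figures are invented purely for illustration (they are not measurements from any campaign), and the helper names `cumulative_revenue` and `crossover_day` are hypothetical. It shows how a cohort that trails on day one or day three can still overtake a front-loaded cohort once the window is long enough.

```python
from itertools import accumulate


def cumulative_revenue(daily_cents):
    """Running total of revenue per install, by day since install."""
    return list(accumulate(daily_cents))


def crossover_day(curve_a, curve_b):
    """Return the first day (1-based) on which curve_b exceeds curve_a, else None."""
    for day, (a, b) in enumerate(zip(curve_a, curve_b), start=1):
        if b > a:
            return day
    return None


# Illustrative assumption: average revenue per install in cents, days 1..7.
# The in-app cohort is front-loaded; the on-device cohort ramps up slowly.
in_app_daily = [30, 20, 10, 5, 5, 2, 2]
on_device_daily = [5, 8, 10, 12, 12, 15, 15]

in_app = cumulative_revenue(in_app_daily)
on_device = cumulative_revenue(on_device_daily)

print("Day 3:", in_app[2], "vs", on_device[2])  # in-app cohort is ahead
print("Day 7:", in_app[6], "vs", on_device[6])  # on-device cohort is ahead
print("Crossover day:", crossover_day(in_app, on_device))
```

With these made-up numbers, a day-three readout favors the in-app cohort while the day-seven total favors the on-device one. Real curves need real cohort data, and the right window depends on the product's own payback period.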

The End: Rethinking Performance Models for Intent-Driven UA

If on-device traffic requires a different model, then UA teams need to adapt what they consider success.

Here are practical shifts that help:

1. Stop judging on-device installs by in-app benchmarks.

Instead of expecting the same high early CTR or instant revenue, teams should look at longer engagement curves and event milestones that better reflect user intent.

2. Align creative messaging with context.

In-app creatives can lean into hooks and engagement cues because users are already in the mood to interact. On-device creatives should speak to utility, discovery, and value because users are making deliberate choices about what to put on their device.

3. Optimize toward meaningful events beyond install.

Rather than optimizing exclusively for install events, UA teams should prioritize optimization for onboarding completion, key in-app actions, and revenue events that correlate strongly with long-term value.

4. Use acquisition models that allow for delayed signals.

Cost-per-install (CPI) and cost-per-action (CPA) pricing models tie payment to an achieved outcome rather than to an impression. That leaves room to wait for slower post-install signals before judging a source.

Platforms that build these principles into their optimization logic, and that account for the difference in intent across surfaces, are better positioned to perform well. For example, in systems like Qi Ads, machine learning can evaluate not only installs but also post-install signal patterns, helping the campaign optimize toward signals that reflect true user intent and real value.
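
One rough way to shortlist post-install events worth optimizing toward is to compare long-term value between users who did and did not complete each event. The sketch below assumes a hypothetical per-user record with an `events` set and a `d30_revenue` field. The field names, event names, and values are illustrative assumptions, not the schema of any attribution tool or of Qi Ads. A difference in means shows association only, not causation.

```python
from statistics import mean


def value_lift(users, event, value_key="d30_revenue"):
    """Mean long-term value of users who did the event minus those who did not.

    Returns None when either group is empty, since no comparison is possible.
    """
    did = [u[value_key] for u in users if event in u["events"]]
    did_not = [u[value_key] for u in users if event not in u["events"]]
    if not did or not did_not:
        return None
    return mean(did) - mean(did_not)


# Hypothetical sample of four users; values are invented for illustration.
users = [
    {"events": {"install", "onboarding_complete", "first_purchase"}, "d30_revenue": 12.0},
    {"events": {"install", "onboarding_complete"}, "d30_revenue": 4.0},
    {"events": {"install"}, "d30_revenue": 0.0},
    {"events": {"install", "onboarding_complete"}, "d30_revenue": 2.0},
]

for event in ("install", "onboarding_complete", "first_purchase"):
    print(event, value_lift(users, event))
```

Here `install` yields no lift at all because every user has it, which is one way to see why install alone is a weak optimization target. In practice the comparison would need far larger samples and some control for confounders before informing a bidding decision.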

Final Takeaway

Clicks and installs may look similar on the surface, but they do not mean the same thing across different acquisition surfaces. A banner click inside an app occurs in a very different context from an install initiated on a device setup screen. These differences in intent shape user behavior and alter the meaning of performance metrics.

By acknowledging that on-device does not equal in-app, UA managers can build models that reflect how users actually behave. This shifts optimization from chasing early spikes to understanding long-term value, and ultimately leads to smarter, more sustainable acquisition.